When conferences end up in my back yard, I usually find a way to attend. Today, I’m at CamundaLocal Toronto, a one-day conference and workshop for Camunda customers, prospects and partners. Toronto is the hot spot for Canadian finance, and we can see the major bank towers from our perch at the top of the Bisha Hotel. I’ve worked for a long time as a process automation consultant in this region, and there is an overwhelming number of investment firms, banks, insurance companies and other financial organizations within a short distance. There’s lots of representation from the big banks and other financials in attendance today.

Our host for the day is Lisa-Marie Fernandes, Camunda Strategic Account Executive. After she introduced the day and gave some background on Camunda, we heard from Sathya Sethuraman, Field CTO, on the problems with siloed automation versus Camunda’s vision of universal process orchestration. Every technology company and CIO has been talking about the necessity of business transformation for years, and Sathya pointed out that process automation is a fundamental part of any sort of digital transformation. The problem arises with siloed automation: local automation within departments or even sub-departments, with no automated interaction between parts of the process. Although people in a given department are convinced of the value of their local automation, customers deal with a much broader end-to-end process in order to do business with an organization. With siloed automation, that customer journey is fragmented and difficult: the customer has to fill in the gaps of the process themselves through multiple phone calls, forms and emails, re-entering their information and explaining their issues at each step. Contrast that with an organization that uses a product such as Camunda for end-to-end orchestration, binding these departmental processes together into a full customer journey. The customer no longer has to deal with multiple processes (while trying to figure out what those processes are from the outside), and people inside the organization no longer have to make heroic efforts just to meet the customer’s needs.

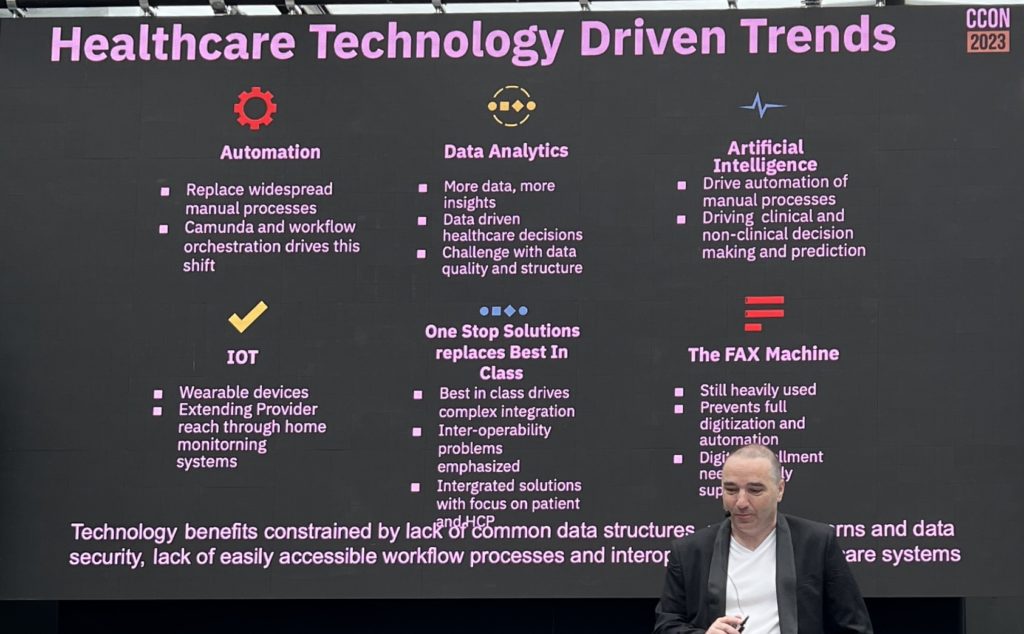

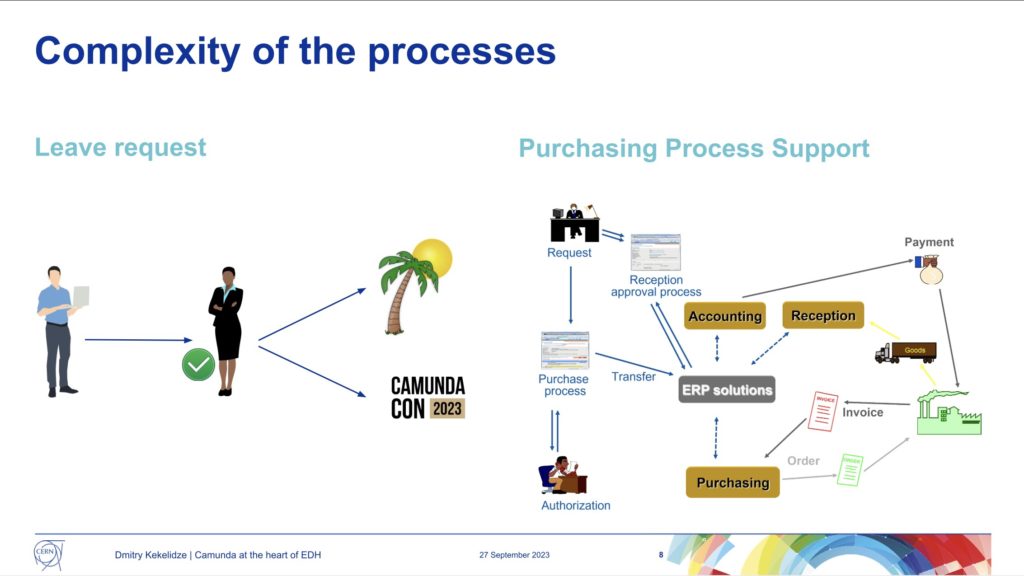

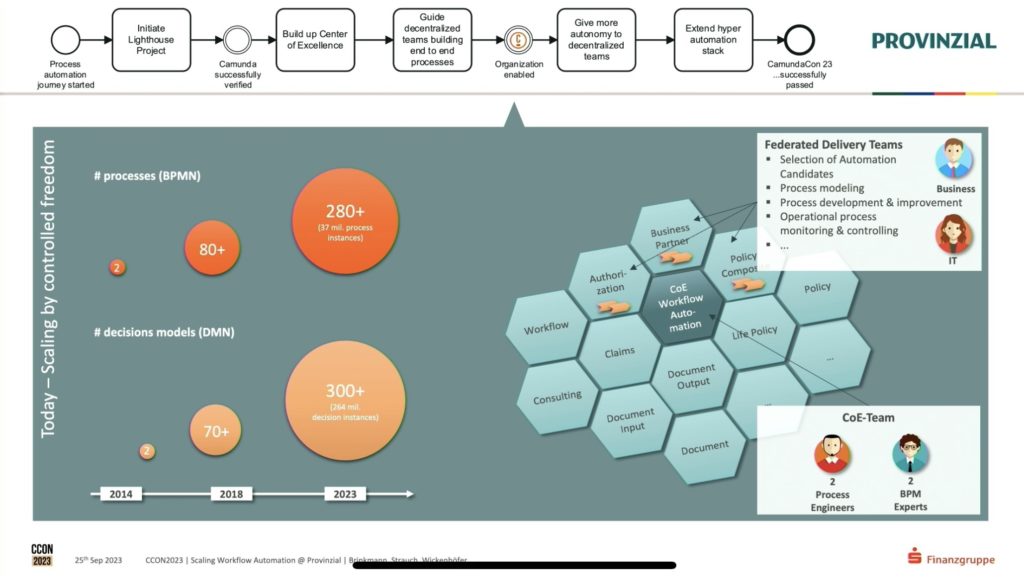

Sathya pointed out the risks of broken (or missing) end-to-end automation, including the increased complexity caused by multiple isolated processes, and the disconnect between business and IT due to the lack of a common language for process automation. From an organization’s standpoint, there are issues with the efficiency and quality of internal processes, but the bigger issue is customer satisfaction: if a competitor has a fully automated end-to-end process that makes things easier for the customer, many customers will take that path of least resistance when choosing who to give their business to. This, of course, is not new. However, with the changes that we’ve seen in the past four years due to the pandemic, work from home and shifting supply chains, more streamlined and automated processes have become a true competitive differentiator. He had an interesting slide showing a heatmap of the many process orchestration opportunities in just the consumer banking value chain: probably 50-60 distinct processes across customer management, retail, lending, cards, payments, risk, finance and accounting, and corporate management, where many financial organizations have room for improvement.

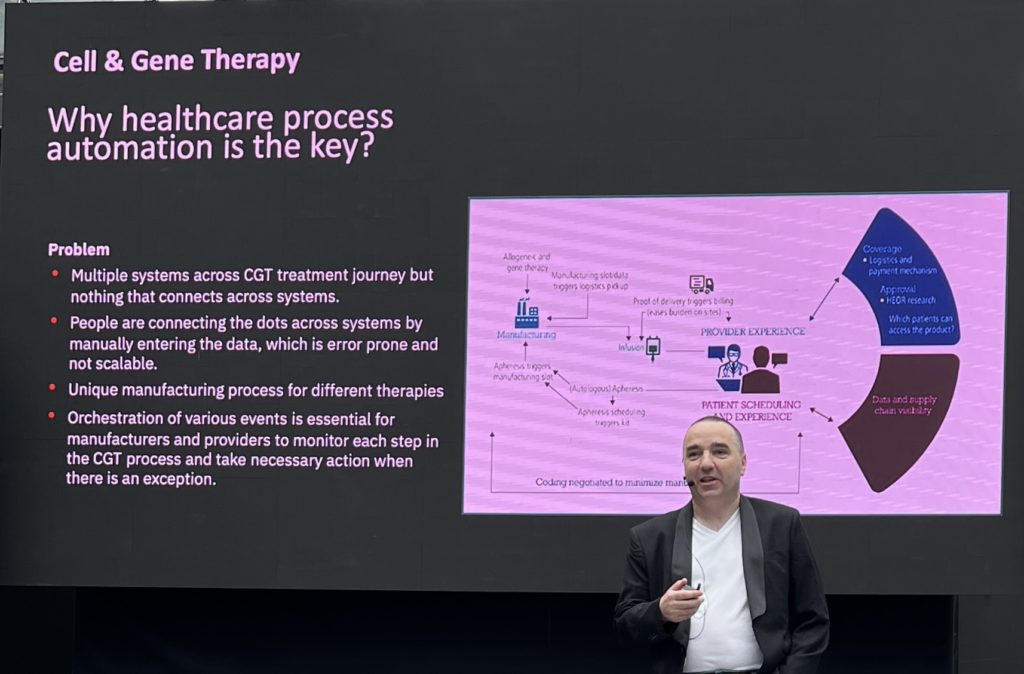

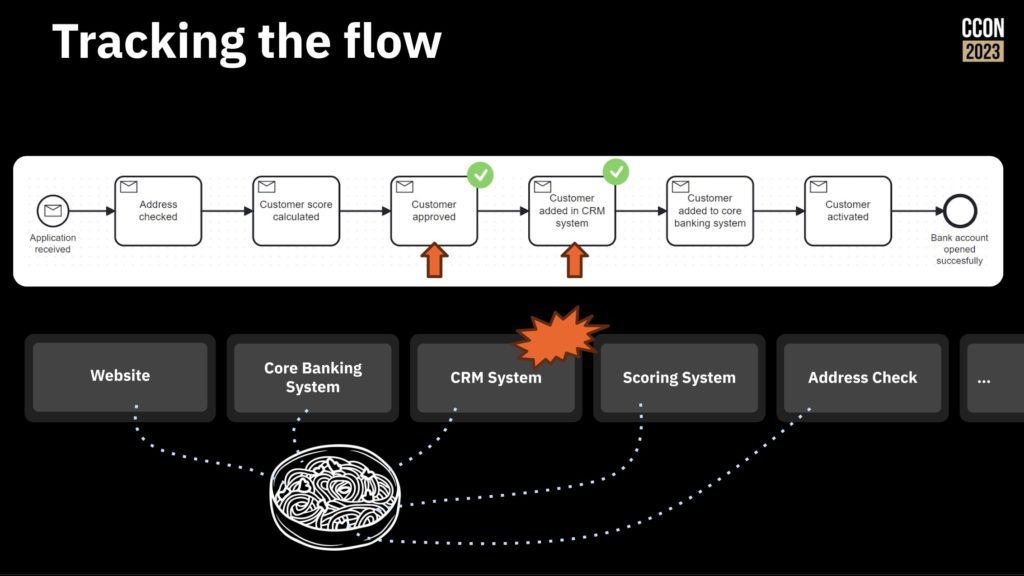

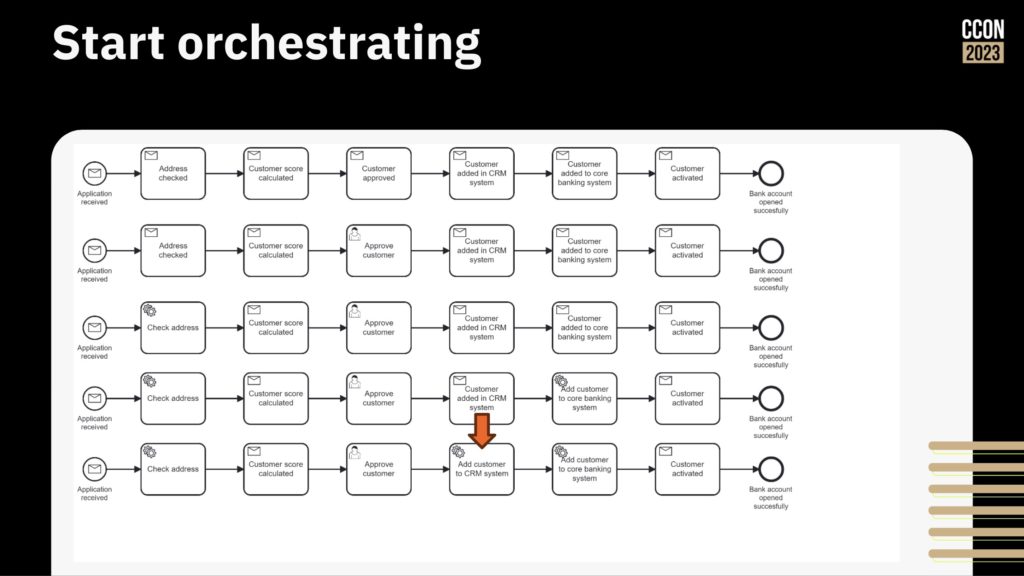

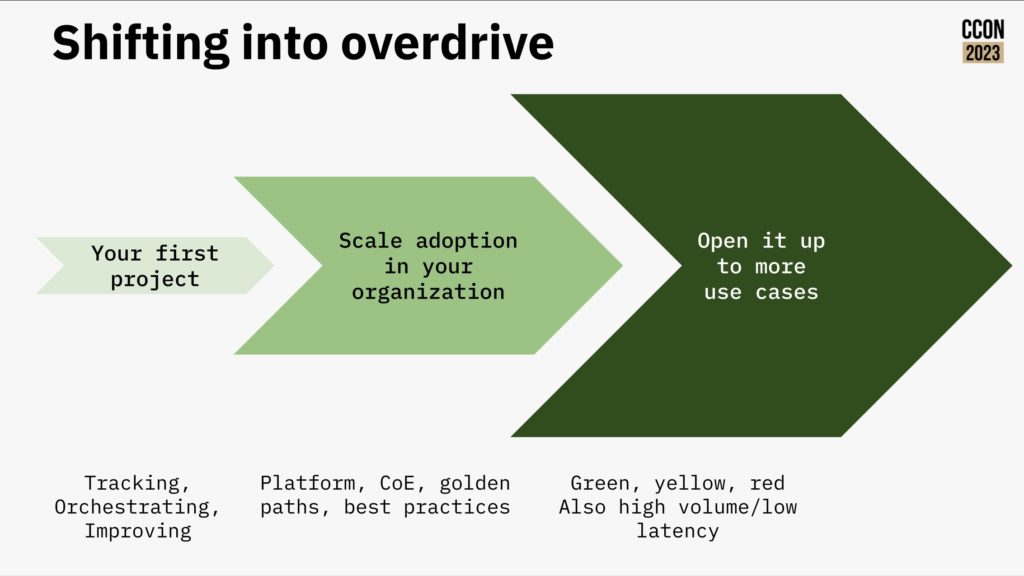

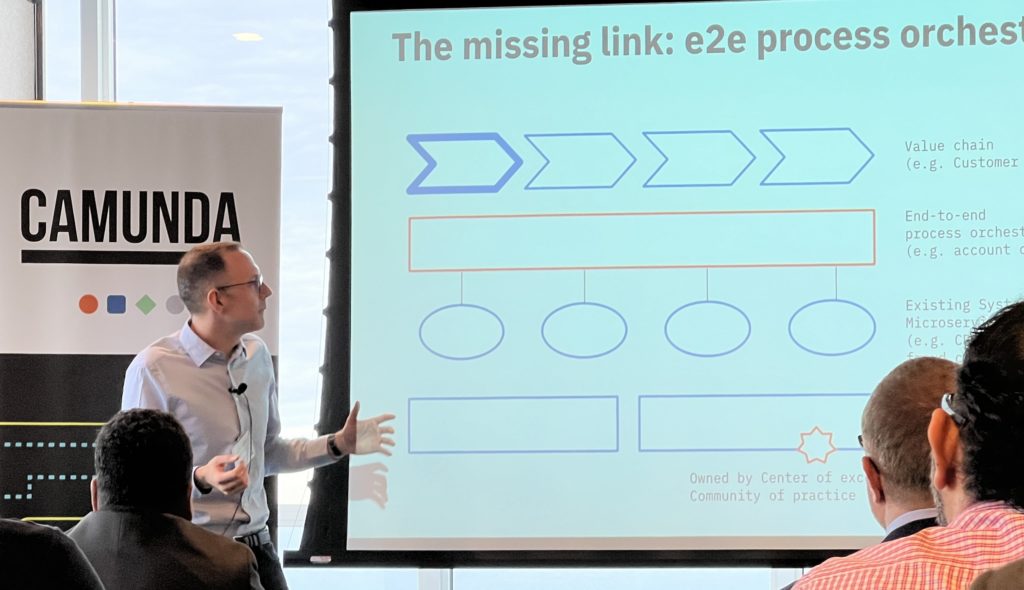

Daniel Meyer, Camunda CTO, then gave us a product roadmap update. He kicked off with a view of the common reality in most large organizations, echoing Sathya’s points about islands of automation without end-to-end process orchestration. Many companies focus on improving local automation (making their core banking systems better, for example) without considering how this makes the customer journey worse, because there’s no integration or linkage between these disparate systems. Opening a bank account as a new customer? There are likely different systems and processes for know-your-client checks, credit checks, customer onboarding and account opening; when one isn’t connected to the others, the customer experiences delays and forms an increasingly negative view of the organization as they struggle to get the new account open. The missing link is end-to-end process orchestration, and this is the focus that Camunda has defined for their product over the past few years.

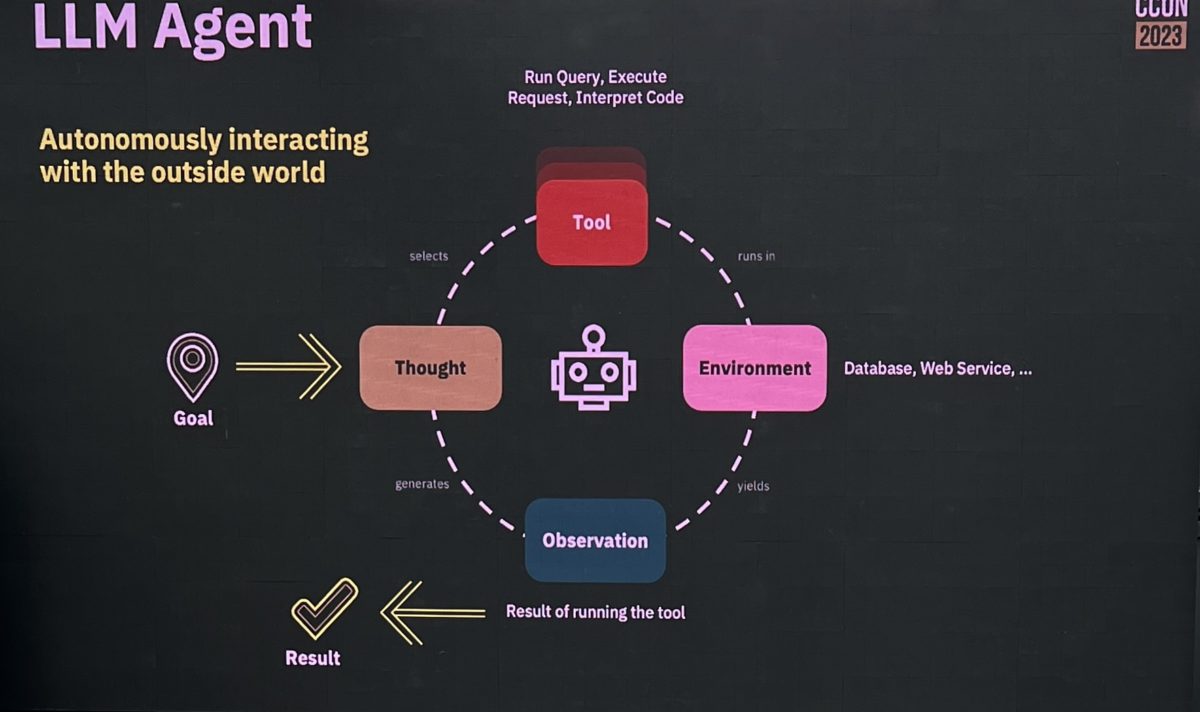

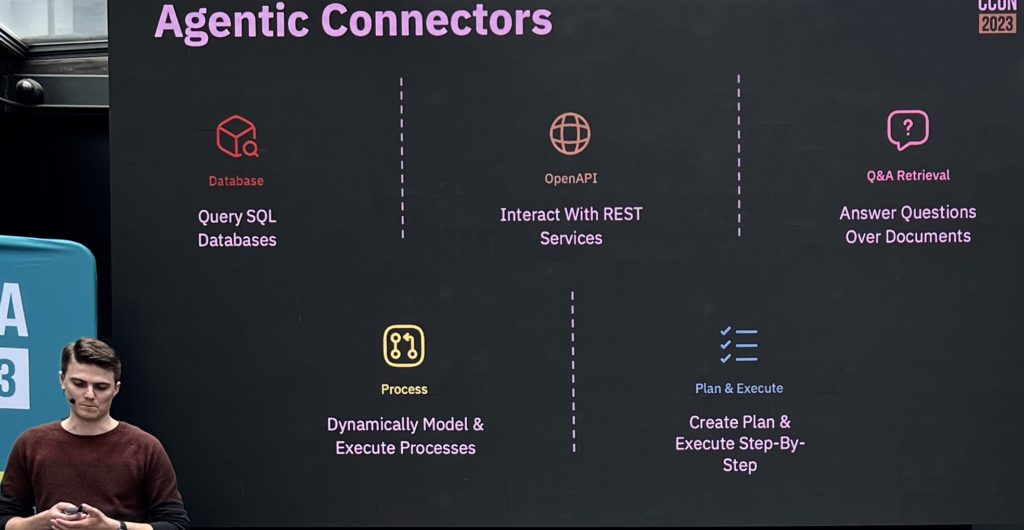

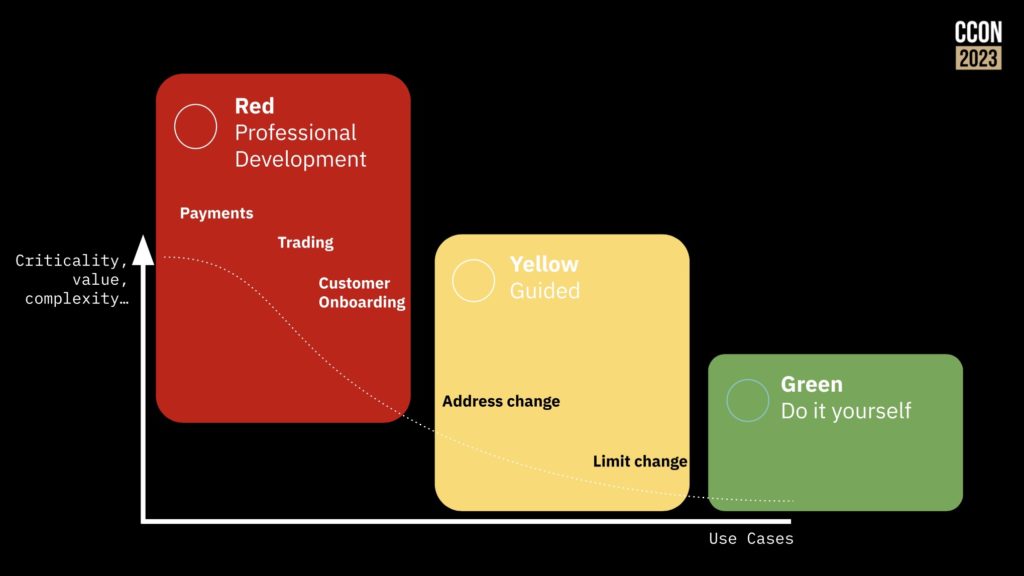

The challenges of end-to-end orchestration include endpoint diversity (it’s more difficult to integrate a heterogeneous set of endpoints, since they have different interfaces and may be at different levels) and process complexity that goes beyond a simple sequence of steps. He showed where some customer use cases sit on a graph of endpoint diversity versus process complexity; Camunda’s sweet spot is the top-right quadrant of that graph, where both the processes and the system integrations are complex. Of course, those are also the mission-critical processes that are controlled by IT, even though business people may be involved in the design and requirements. In this context, their vision is Camunda as the universal process orchestrator: supporting complex process flows while also integrating human work, AI, business rules, microservices, RPA, IoT and APIs of all types. That being said, they still have quite a developer-centric bias.

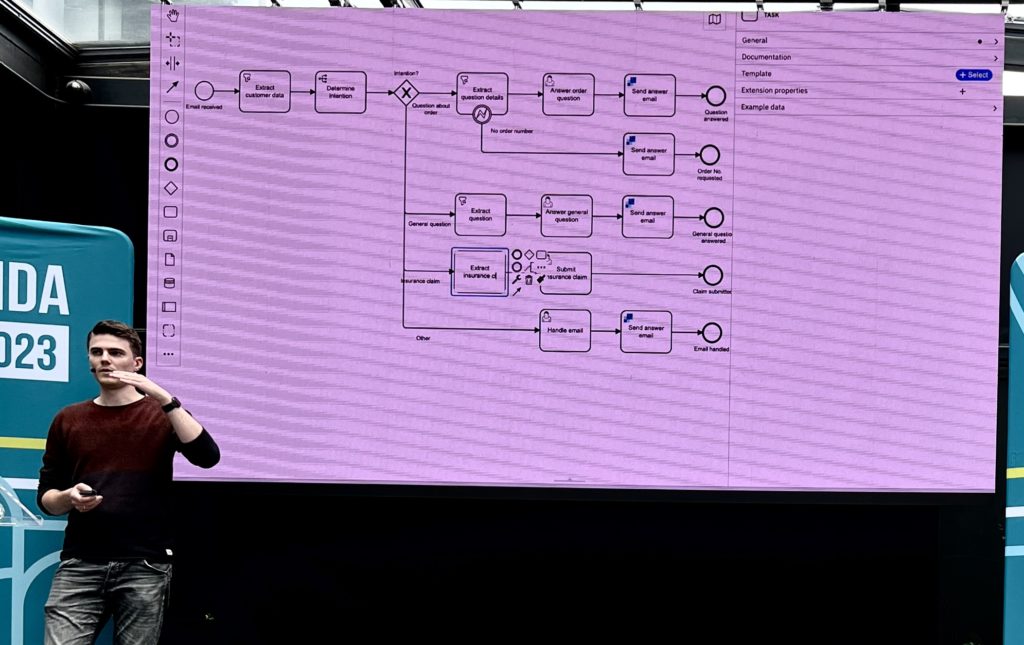

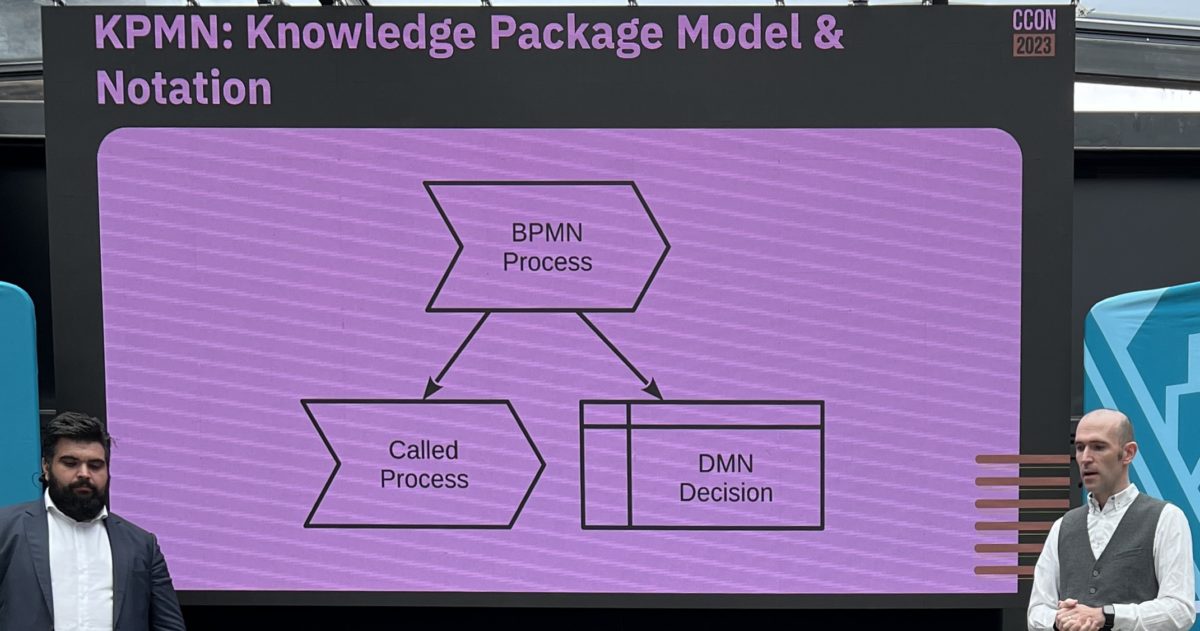

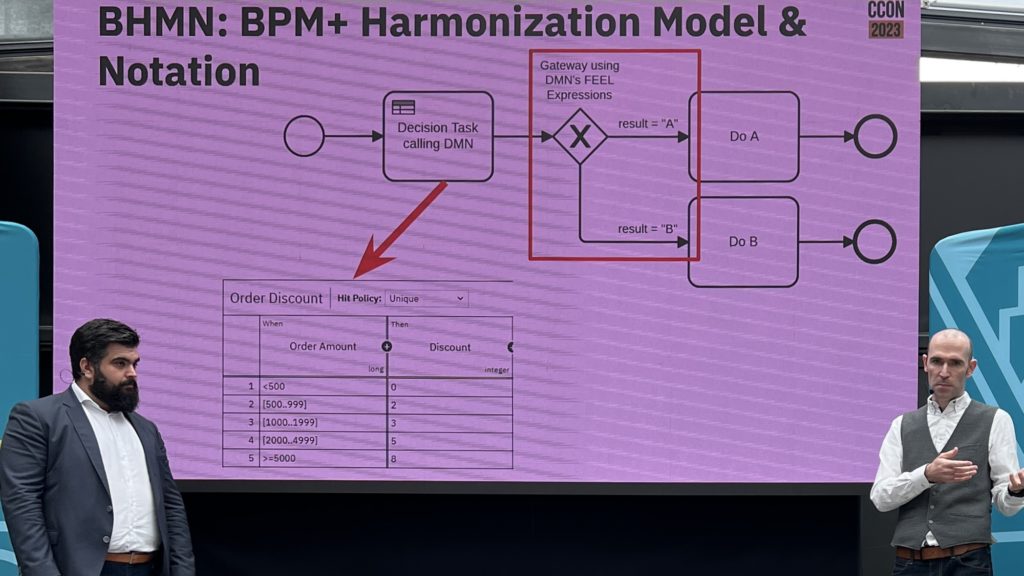

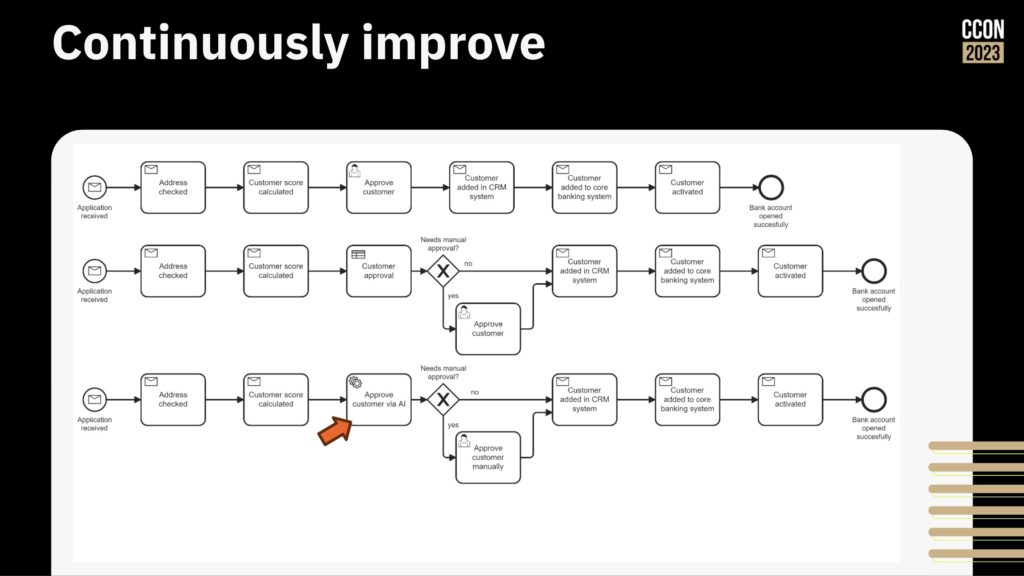

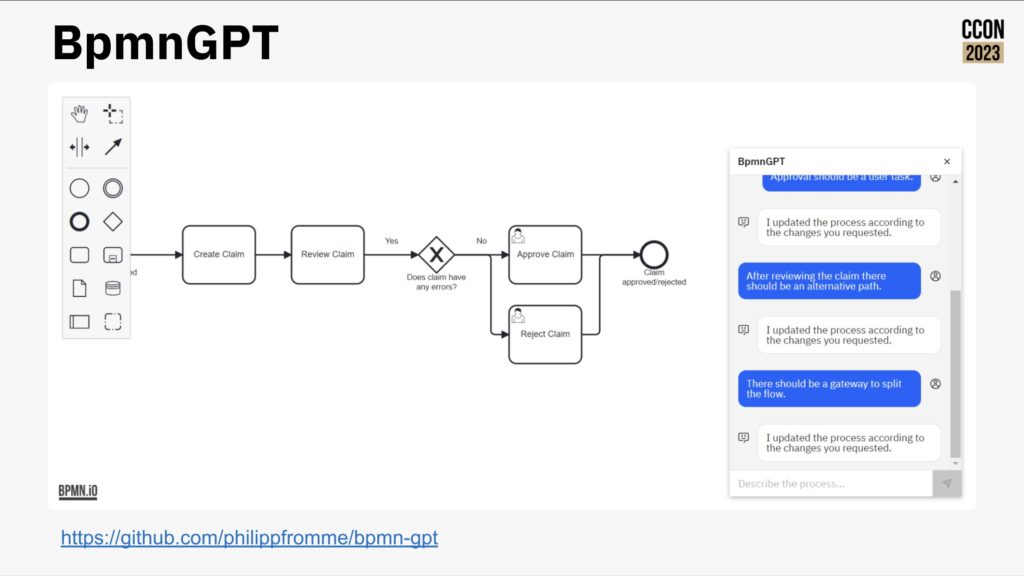

Daniel spent a bit of time on some of the terminology and usage: process orchestration versus business process management (essentially the same when you’re talking about the automation side of BPM, and Camunda also uses the term “workflow engine” for the core of process automation), and who within an organization creates BPMN diagrams. This was a pretty technical audience, and there was a bit of discussion on the value of BPMN once you get down to a level of detail that a non-technical process owner isn’t going to look at. I believe it’s still incredibly valuable as a graphical, process-oriented development language even for models that are not directly viewed by business people: it provides a level of functionality as well as development guardrails (e.g., model validation) that can accelerate development and increase reusability, while making it more accessible to less experienced developers.

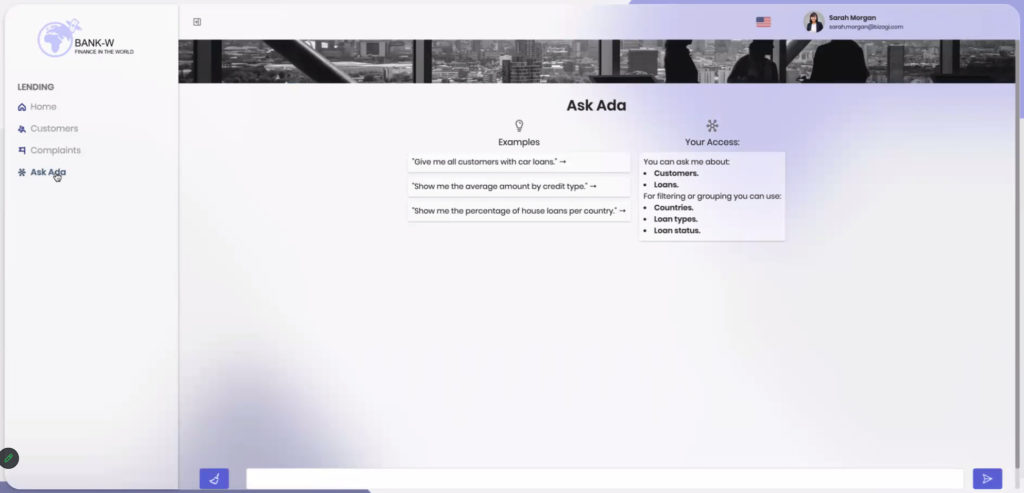

Camunda, of course, is only one piece of the entire process improvement cycle, albeit a critical core piece of the automation. Most customers are also using some combination of process mining, more advanced business modeling, and business intelligence; these essential activities of discovery, design and analysis/improvement fit around Camunda’s process automation and low-code integration offerings. Some automation technologies, including AI, RPA and an event bus, are not part of the core Camunda platform but are easily integrated, allowing best-of-breed components to be added. And although Camunda has recently added some front-end UI capabilities, these are fairly rudimentary forms, and most companies will be integrating Camunda into their existing application/UI development environment.

Daniel took us through Camunda’s investment focus for product development: developer productivity, AI/ML, collaboration, business intelligence, low-code and universal connectivity. He also highlighted the connectors available in the Camunda Marketplace, which can be pulled in and used in any model for connectivity beyond what is included in the out-of-the-box product.

Camunda has a regular cycle of releases every six months, and he gave us a quick overview of what’s coming up in 8.5 in April and 8.6 in October, as well as future plans for 8.7 and beyond. This is all for systems based on the Zeebe architecture (V8+); it’s not clear from this presentation what, if anything, they are doing for customers still on V7 aside from encouraging and assisting with migration to V8. As with many vendors that completely replatform, migrations to the new platform are likely much slower than they anticipated, with the added complication that they are leaving behind their open source legacy with the shift to the modern Zeebe engine.

After lunch, there was a short customer presentation by Norfolk & Dedham insurance, who are using Camunda 7 with Spring Boot as an embedded engine, invoked from their own UI portal for managing claims. They customized the Camunda Modeler to integrate their own applications and data sources in order to accelerate development. In addition to the usual benefits of process automation and integration, the data generated by process instances is hugely valuable to them when looking at how to translate activities into more strategic actions while planning future improvements to their business operations. Now that their claims applications are in advanced testing stages, they are expanding the same technical stack to their underwriting applications, and are seeing a significant benefit from reusing components that they created during the claims project. They aren’t yet planning a V8 migration, although they are very aware that one is likely in their future.

Following the customer presentation, Gustavo Mendoza, a Camunda senior sales engineer, gave us a technical demo of the V8 product stack to expand on some of the points that Daniel Meyer covered earlier. We saw demos of the process and decision modelers, including the collaboration features, and the UI forms builder for task handling, then the execution environment, showing user interaction with a running process, the Operate monitoring portal and integration with Slack.

The remainder of the afternoon was split into a hands-on live coding workshop for customers, and a partner workshop. A full and worthwhile day if you’re in the Camunda ecosystem.

Camunda has a couple of other CamundaLocal events coming up soon in Chicago and San Francisco, plus their main European conference in Berlin in May and their North American conference later in the year (September in New York, I think).